How to clean data in Graylog and OpenSearch safely?

Houston, we have a problem! That was the first sentence I wrote to my boss. A year ago, when I started working at this company, I saw a big problem. We had many test systems on Linux, each running many programs. Every one of these programs wrote many log files, and QA testers could read them only in the Linux filesystem.

I had not worked with Graylog before, but being able to see all the log files in one web interface was amazing. At the time I installed only Graylog-server, OpenSearch for the logs, and MongoDB for storing settings. If you are interested in this topic, I have articles about installing it and setting it up.

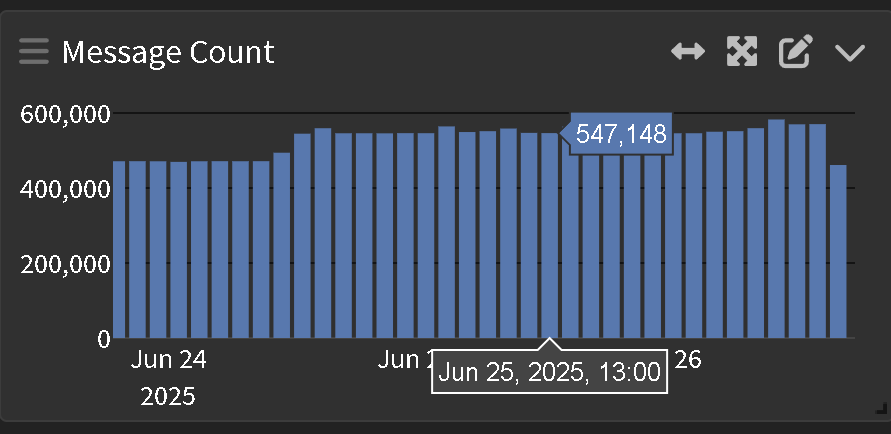

At first I connected a few services and all was good, but a year later we have more than 30 active services and 50 000 000 new messages every week (about 300 000 per hour).

Of course, this volume is small for big production systems, but for my little Graylog server it was critical. The retention period of the logs was set to two weeks, but four days ago many services moved to the testing stage. Free space ran out really quickly! The Graylog server and other programs started lagging heavily, the logs stopped coming in, and everything was filled with red errors.

So, there are several ways to clean up data in Graylog and OpenSearch. We'll start with the safest one.

How to clean data in Graylog web interface?

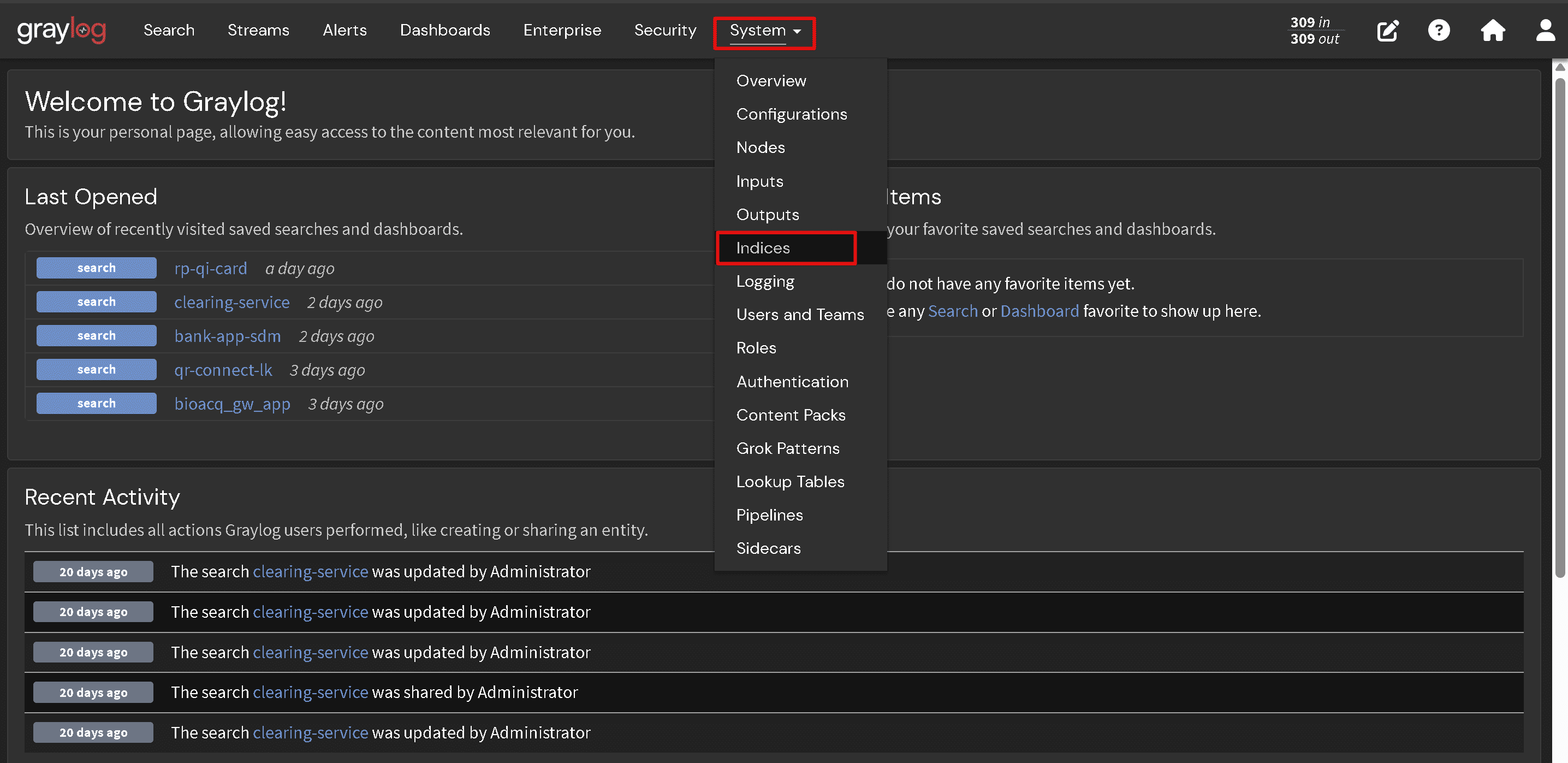

If you have managed to add at least a little disk space to the server, or to clean up anything unnecessary, this option will most likely work. Go to the Graylog web interface, then System => Indices.

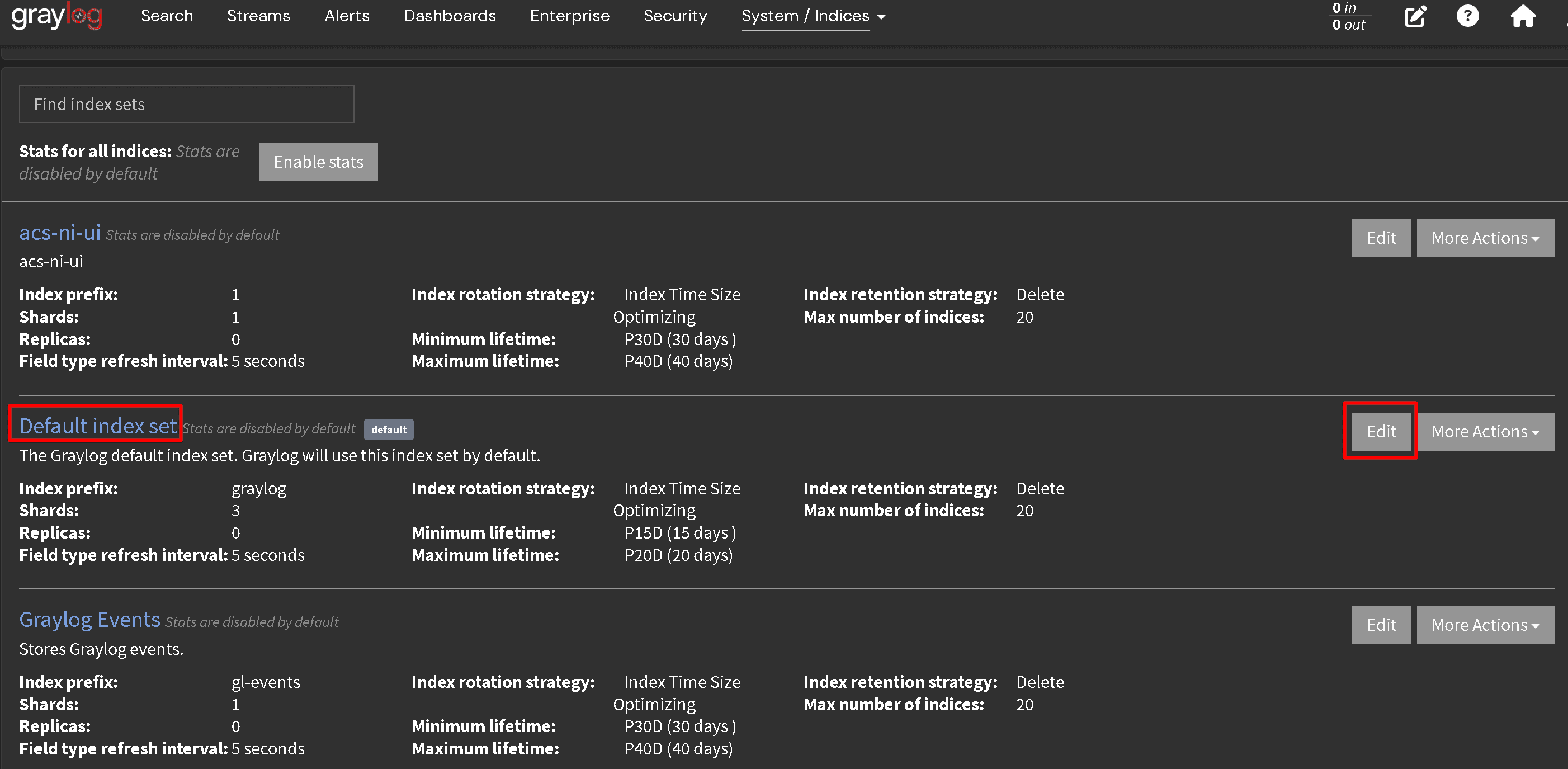

In my case, we choose the Default index set, since I did not split the logs into separate index sets and all the data is stored in the main one (Edit, we'll press later 😉).

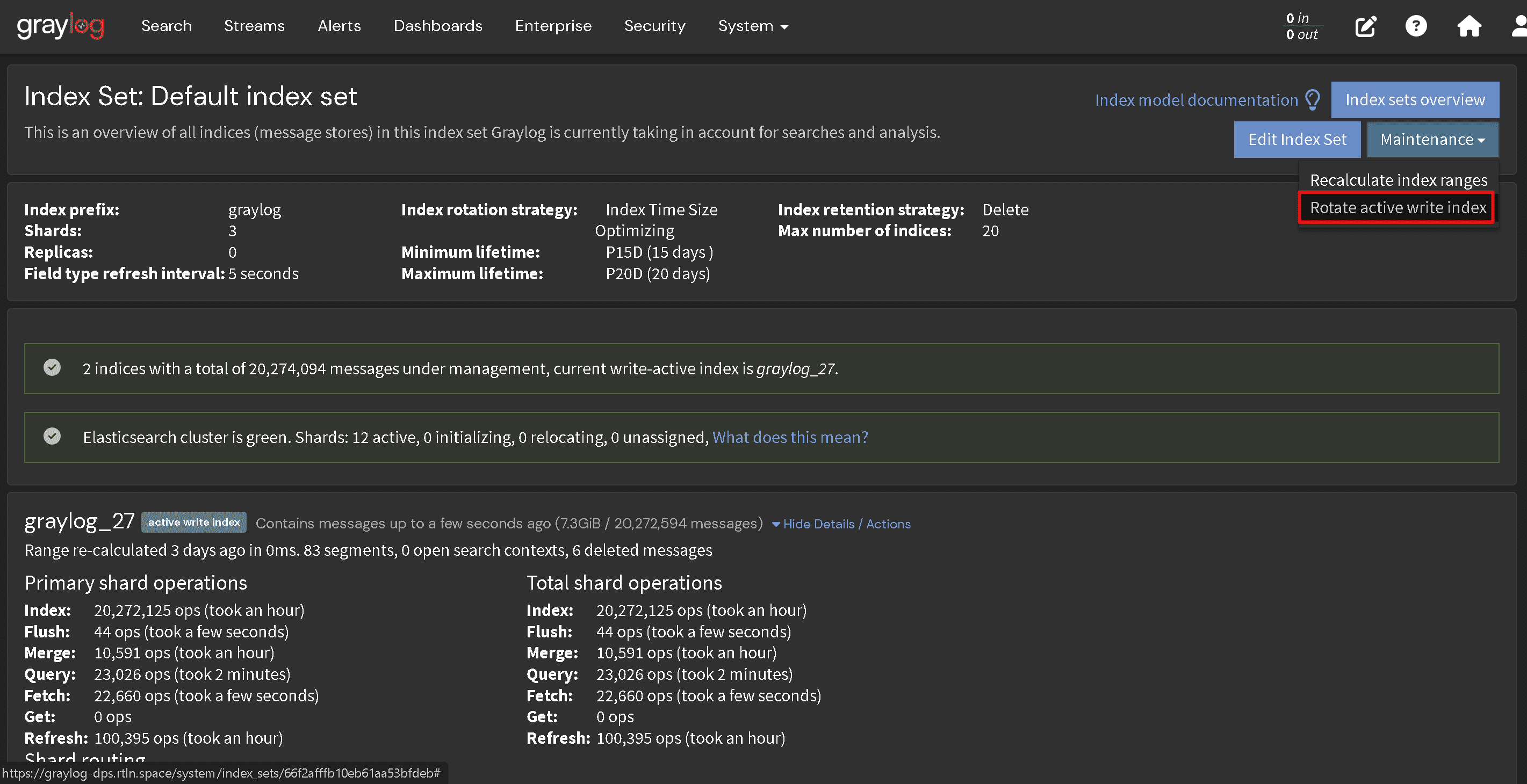

Here we see an index named graylog-27 with the status active write index. When we press Rotate active write index (in the Maintenance menu), the system will create a new index and start writing all new logs into it.

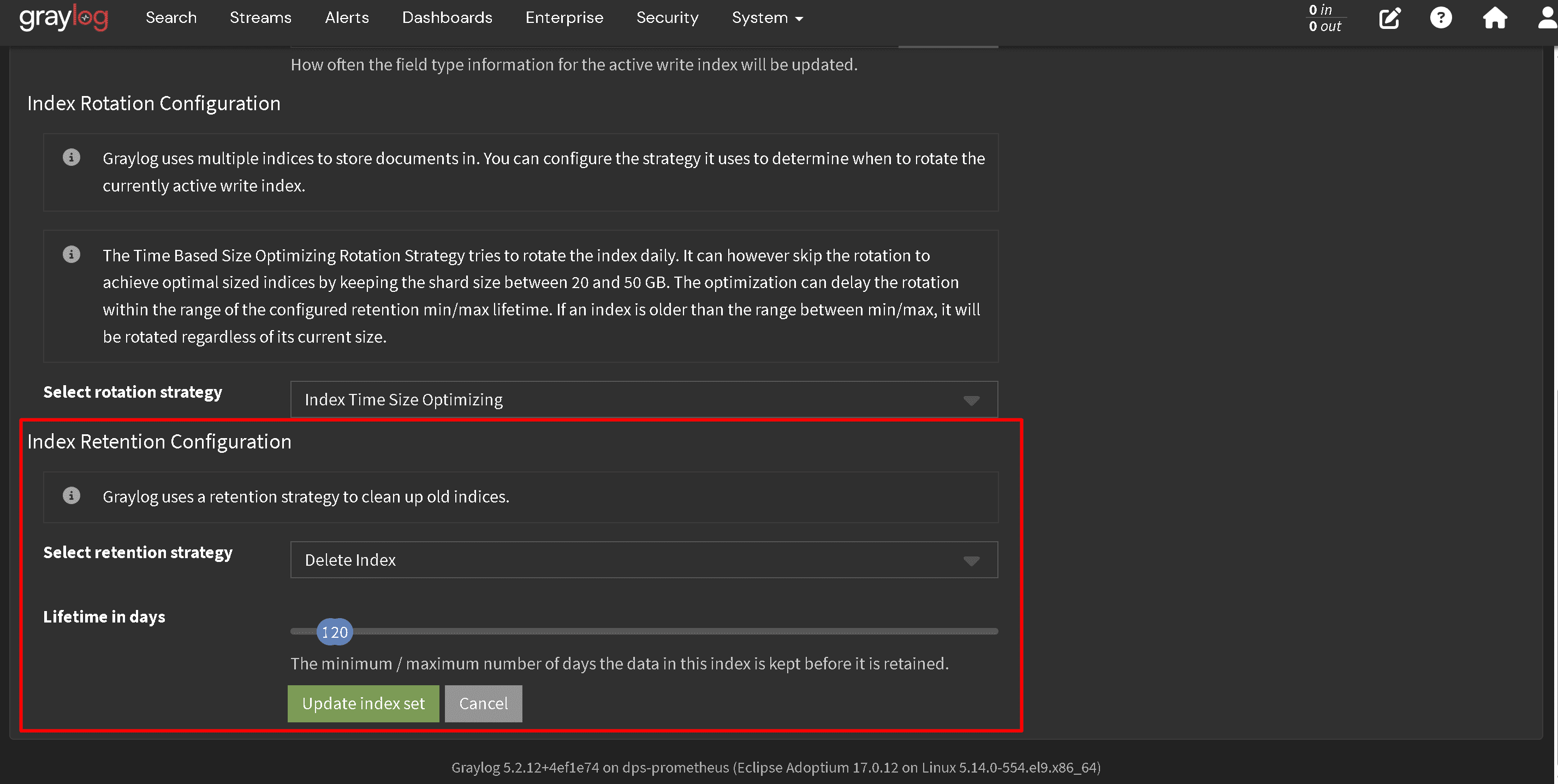

Then we can clean out the old Graylog data by deleting the index graylog-27. The problem here is that we lose all the old logs. To prevent this from happening again in the future, you can configure Index Retention (the same Edit button 👍). This setting is responsible for the retention period of logs and deletes those that fall outside the specified limits.

But in my case, the situation was worse...

How to clean data in OpenSearch by API?

If OpenSearch is running, you can do the cleanup using its API. I did this with the curl command directly on the server where it is installed. With this query, we get a list of the existing indexes in OpenSearch, sorted by the disk space they occupy.

curl -XGET "http://localhost:9200/_cat/indices?v&s=store.size:desc"

And with this query, we delete the unnecessary index. Don't forget to replace {index_name} with the actual name.

curl -XDELETE "http://localhost:9200/{index_name}"

Having failed to find the index, the Graylog server should create a new one with all the necessary settings. But it's better to restart it.
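The two queries above can be wrapped into a small helper so that nothing is deleted by accident. This is only a sketch: it assumes OpenSearch answers on localhost:9200 without authentication, and the names `OS_URL`, `list_indices_by_size`, `larger_than` and `delete_index` are made up for illustration.

```shell
#!/usr/bin/env bash
# Assumption: OpenSearch is reachable here without authentication.
OS_URL="${OS_URL:-http://localhost:9200}"

# Print "index size_in_bytes" for every index, largest first.
list_indices_by_size() {
  curl -s "$OS_URL/_cat/indices?h=index,store.size&bytes=b&s=store.size:desc"
}

# Read such a listing on stdin and print the indexes larger than $1 bytes.
larger_than() {
  awk -v limit="$1" '$2 + 0 > limit { print $1 }'
}

# Delete one index, but only after an explicit "y" from the operator.
delete_index() {
  local index="$1" answer
  read -r -p "Delete index '$index'? [y/N] " answer
  if [ "$answer" = "y" ]; then
    curl -s -XDELETE "$OS_URL/$index"
  else
    echo "Skipped $index"
  fi
}
```

For example, `list_indices_by_size | larger_than 10000000000` would print only the indexes above roughly 10 GB, and each of them can then be passed to `delete_index` one by one.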

If we don't want to delete the index, we can try to clear it. In this case it is worth keeping in mind that OpenSearch puts a read-only block (index.blocks.read_only_allow_delete) on indexes when the disk overflows. This query can help in such a situation:

curl -X PUT "http://<opensearch-host>:9200/{index_name}/_settings" \
  -H 'Content-Type: application/json' -d'
{
  "index.blocks.read_only_allow_delete": null
}
'

But of course it's better to just delete it; partial cleaning can be too difficult. Let's move on to the last, most dangerous option.
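Before that, here is a rough sketch of what "clearing" an index through the API could look like. The read-only block is set when disk usage crosses the flood-stage watermark (95% by default), so the sketch checks free space first, removes the block, and then deletes old messages with `_delete_by_query`. Everything here is an assumption to adapt: the URL, the data path, the helper names, and the use of Graylog's `timestamp` field as the age criterion. Keep in mind that deleted documents free disk space only after segments are merged, which is one reason partial cleaning is hard.

```shell
#!/usr/bin/env bash
OS_URL="${OS_URL:-http://localhost:9200}"    # assumption: local node, no auth
DATA_DIR="${DATA_DIR:-/var/lib/opensearch}"  # assumption: default data path
FLOOD_STAGE="${FLOOD_STAGE:-95}"             # flood-stage watermark, percent

# Print the used-space percentage (digits only) of the filesystem holding $1.
disk_used_percent() {
  df -P "$1" | awk 'NR == 2 { gsub("%", "", $5); print $5 }'
}

# Succeed when the given usage percentage is below the flood-stage watermark.
below_flood_stage() {
  [ "$1" -lt "$FLOOD_STAGE" ]
}

# Remove the read-only block from index $1, but only if there is room again.
unblock_index() {
  local used
  used="$(disk_used_percent "$DATA_DIR")"
  if below_flood_stage "$used"; then
    curl -s -X PUT "$OS_URL/$1/_settings" \
      -H 'Content-Type: application/json' \
      -d '{"index.blocks.read_only_allow_delete": null}'
  else
    echo "Disk is ${used}% full; free some space first." >&2
    return 1
  fi
}

# Build a query body matching messages older than $1 days.
older_than_query() {
  printf '{"query":{"range":{"timestamp":{"lt":"now-%dd"}}}}\n' "$1"
}

# Delete messages older than $2 days from index $1.
delete_older_than() {
  curl -s -X POST "$OS_URL/$1/_delete_by_query" \
    -H 'Content-Type: application/json' \
    -d "$(older_than_query "$2")"
}
```

A possible call sequence is `unblock_index {index_name} && delete_older_than {index_name} 7`.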

How to clean data in Graylog by deleting OpenSearch indexes in the file system?

If OpenSearch has already stopped due to errors and is not working, that's good. Otherwise, stop it forcibly with this command:

sudo systemctl stop opensearch

By default, OpenSearch indexes are stored in /var/lib/opensearch/nodes/0/indices/ (tested on CentOS). Let's see which of the indexes are the largest and delete them.

sudo du -h --max-depth=1 /var/lib/opensearch/nodes/0/indices/
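With many indexes, the plain du output is hard to scan. A minimal sketch of a helper that prints only the biggest folders, largest first, could look like this. The function name `largest_dirs` is made up for illustration, and it has to run in a root shell to read the OpenSearch data directory.

```shell
#!/usr/bin/env bash
# Print the N largest direct sub-folders of a directory, biggest first.
# Output format: size in KiB, a tab, then the folder path.
largest_dirs() {
  local dir="$1" count="${2:-5}"
  find "$dir" -mindepth 1 -maxdepth 1 -type d -exec du -sk {} + \
    | sort -rn | head -n "$count"
}
```

For example, `largest_dirs /var/lib/opensearch/nodes/0/indices/ 3` would show the three largest index folders.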

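sudo rm -rf /var/lib/opensearch/nodes/0/indices/{index_name}

Note that the folders here are named by index UUID rather than by index name, so {index_name} stands for the folder you picked from the du output. Then launch OpenSearch again: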

sudo systemctl start opensearch

If it starts at this point, everything is fine. But more often there will be an error. OpenSearch keeps cached state that still remembers the deleted index and, not finding it at startup, crashes with an error. To fix this, you can clean the corresponding folders with the following commands.

sudo rm -rf /var/lib/opensearch/nodes/0/_state/*

sudo rm -rf /tmp/*

At this point, you can start OpenSearch and, if it works, restart the Graylog server.
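OpenSearch needs some time after the start before it answers requests, so restarting Graylog immediately may fail. The sketch below polls the cluster health endpoint until it responds. The function name `wait_for_opensearch`, the default URL and the retry numbers are assumptions, not something from my setup.

```shell
#!/usr/bin/env bash
# Poll OpenSearch until it answers, or give up after a number of attempts.
# $1: base URL, $2: number of attempts, $3: seconds to wait between attempts.
wait_for_opensearch() {
  local url="${1:-http://localhost:9200}" tries="${2:-30}" delay="${3:-2}" i
  for ((i = 0; i < tries; i++)); do
    # -f makes curl fail on HTTP errors, -s keeps it quiet
    if curl -sf "$url/_cluster/health" >/dev/null; then
      return 0
    fi
    sleep "$delay"
  done
  return 1
}
```

It could be used like `wait_for_opensearch && sudo systemctl restart graylog-server`, assuming the Graylog systemd unit is called graylog-server on your system.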

Everything should work, but as always with Linux, it's not for sure. 😅